OpenTelemetry 101 - A Practical Guide to Unified Observability

Modern systems are distributed across clouds, containers, and serverless functions, offering scalability but also adding complexity. When a payment API slows down or a cloud bill suddenly spikes, teams need more than basic uptime checks to diagnose the problem.

They need observability: the ability to understand why a system behaves the way it does by analyzing traces, metrics, and logs.

Traditional monitoring tools fall short here. They focus on predefined thresholds (e.g., CPU usage > 90%) but can’t answer questions like, “Why did latency increase for users in Europe at 3 PM?” or “Which microservice is driving up our AWS bill?” Observability fills this gap by letting you ask arbitrary questions of your system, using telemetry data as your guide.

For DevOps and SRE teams, this means faster troubleshooting. For FinOps professionals, it means linking infrastructure costs to specific services or teams.

But here’s the catch! Observability requires actionable telemetry data, and legacy tools often silo this data into vendor-specific formats. This is where OpenTelemetry (OTel) comes in—a vendor-neutral, open-source framework that standardizes how you generate, collect, and export telemetry.

Instead of relying on fragmented, proprietary solutions, teams can use OTel to instrument their code once and send data to any backend such as Prometheus, Jaeger or custom solutions.

This guide will walk you through how OpenTelemetry works, why it’s essential for modern engineering teams, and how to get started implementing it.

Observability in modern cloud-native systems relies on high-quality, actionable telemetry data, but collecting this data efficiently has long been a challenge.

Until recently, organizations relied on vendor-specific observability tools, each with its own instrumentation methods and data formats. For example, instrumenting metrics with the Prometheus client library ties that instrumentation to Prometheus, which limits flexibility.

This has led to fragmented observability pipelines, vendor lock-in, and operational inefficiencies, forcing teams to juggle different agents, SDKs, and query languages for each tool they use.

OpenTelemetry (OTel) solves this by providing a vendor-neutral, open-source framework for generating, collecting, and exporting telemetry data (traces, metrics, and logs). Instead of instrumenting services separately for different observability tools, OTel allows teams to instrument once and send data anywhere—whether it’s Prometheus, Jaeger, or a custom backend.

As a CNCF incubating project, OpenTelemetry is designed to standardize observability across languages, infrastructure, and cloud providers. It reduces the complexity of managing multiple instrumentation methods and gives teams full control over their telemetry data.

At its core, OpenTelemetry is built on three main components:

With OpenTelemetry, telemetry data is no longer tied to a single tool—it’s a portable, flexible, and scalable foundation for modern observability.

Modern observability isn’t just about collecting logs, metrics, and traces—it’s about making that data actionable. If raw telemetry lacks structure or context, it becomes difficult to correlate events and troubleshoot issues effectively.

OpenTelemetry enhances telemetry data by standardizing formats, ensuring seamless correlation, and propagating the “request context” across services.

OpenTelemetry captures four key telemetry signals, allowing teams to instrument once and analyze across multiple backends without vendor lock-in:

By handling these signals consistently, OpenTelemetry ensures telemetry data is structured, portable, and vendor-agnostic.

In distributed systems, a single request often jumps between multiple services. Without a way to track that request flow, debugging is painful—logs appear disconnected, and traces don’t tell the full story.

OpenTelemetry solves this with context propagation, i.e., passing trace context from one service to the next (automatically, when instrumentation libraries are in place). This means engineers can reconstruct an entire request flow, even when it passes through multiple layers such as APIs, databases, and message queues.

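OTel supports the W3C Trace Context standard natively (it is the default propagator in the SDKs). The standard defines the traceparent and tracestate HTTP headers, which carry the trace ID, the parent span ID, and sampling flags from one service to the next; supported messaging and job-queue instrumentations carry the same context in message headers. This gives engineers a clear, end-to-end picture of how requests behave across an entire system.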
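To make the header concrete, here is a minimal JavaScript sketch of the traceparent layout (version-traceid-parentid-traceflags). The helpers buildTraceparent and parseTraceparent are hypothetical and only illustrate the format; in a real service, OpenTelemetry's W3C Trace Context propagator injects and extracts this header for you. The sketch only handles the four-field layout used by version 00.

```javascript
const crypto = require("crypto");

// Four-field layout: 2 hex version, 32 hex trace ID, 16 hex parent (span) ID, 2 hex flags
const TRACEPARENT_RE = /^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/;

// Random lowercase hex string of the given byte length.
function randomHex(bytes) {
  return crypto.randomBytes(bytes).toString("hex");
}

// Hypothetical helper: build the header a service sends on an outgoing call.
function buildTraceparent(traceId, spanId, sampled) {
  const flags = sampled ? "01" : "00"; // bit 0x01 is the "sampled" flag
  return `00-${traceId}-${spanId}-${flags}`;
}

// Hypothetical helper: parse an incoming header, returning null if it is invalid.
function parseTraceparent(header) {
  const match = TRACEPARENT_RE.exec(String(header).trim());
  if (!match) return null;
  const [, version, traceId, spanId, flags] = match;
  // Version ff and all-zero IDs are invalid per the W3C specification.
  if (version === "ff" || /^0+$/.test(traceId) || /^0+$/.test(spanId)) return null;
  return { version, traceId, spanId, sampled: (parseInt(flags, 16) & 0x01) === 1 };
}

// Service A starts a trace and calls service B with this header...
const outgoing = buildTraceparent(randomHex(16), randomHex(8), true);
// ...service B extracts it, keeps the trace ID, and uses its own span ID downstream.
const ctx = parseTraceparent(outgoing);
const nextHop = buildTraceparent(ctx.traceId, randomHex(8), ctx.sampled);
console.log(outgoing);
console.log(nextHop);
```

Because every hop reuses the trace ID and only swaps in its own span ID, a backend can stitch the spans from all services into one trace.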

By unifying telemetry signals and building in context propagation, OpenTelemetry makes observability about more than collecting data: it helps you truly understand system behavior.

Observability in modern systems is fragmented. Engineering teams often rely on multiple tools, each with its own way of collecting and storing telemetry data. This lack of standardization creates operational overhead, slows down incident response, and makes it difficult to gain a unified view of system performance.

OpenTelemetry (OTel) addresses this by establishing a single, vendor-neutral standard for telemetry collection. Instead of being locked into proprietary observability tools, teams can instrument their systems once and export data anywhere.

But beyond standardization, OpenTelemetry provides practical benefits that improve both engineering workflows and business operations.

Most observability tools require proprietary SDKs and agents, making it difficult to switch platforms without extensive re-instrumentation. This leads to vendor lock-in, high switching costs, and operational inefficiencies.

OpenTelemetry solves this by allowing teams to instrument once and export telemetry data to any backend. Whether an organization is using Prometheus today or considering any other solution tomorrow, OpenTelemetry ensures that telemetry data remains portable and adaptable.

Engineers no longer need to manage multiple SDKs, and businesses avoid the costs and complexity of tightly coupled observability stacks.

One of the biggest challenges in observability is rising data storage and processing costs. Logs, traces, and metrics can generate massive amounts of telemetry data, much of which may be redundant or unnecessary. Without smart data collection and filtering mechanisms, teams end up paying for more data than they actually need.

OpenTelemetry helps optimize observability costs by applying sampling and processing before data reaches the backend. This reduces the volume of stored telemetry data while retaining critical insights for debugging and performance monitoring.

For example, a company processing millions of transactions per day may choose to retain full traces for failed checkouts but only sample a small percentage of successful ones.
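A minimal sketch of that policy in JavaScript, assuming a hypothetical in-memory trace shape ({ traceId, spans: [{ name, status }] }). In practice this decision is usually configured in the Collector's tail-sampling processor rather than written by hand; shouldKeepTrace and traceIdToUnit are illustrative names only.

```javascript
// Map a trace ID deterministically to [0, 1) so every component that sees
// the same trace makes the same keep/drop decision.
function traceIdToUnit(traceId) {
  // Use the last 8 hex digits (32 bits) of the 128-bit trace ID.
  return parseInt(traceId.slice(-8), 16) / 0x100000000;
}

// Keep every trace that contains an error; keep only a fraction of the rest.
function shouldKeepTrace(trace, successSampleRatio) {
  const hasError = trace.spans.some((span) => span.status === "ERROR");
  if (hasError) return true; // failed checkouts: always keep
  return traceIdToUnit(trace.traceId) < successSampleRatio;
}

const traces = [
  { traceId: "4bf92f3577b34da6a3ce929da1b2c3d4", spans: [{ name: "POST /checkout", status: "OK" }] },
  { traceId: "0af7651916cd43dd8448eb211c80319c", spans: [{ name: "POST /checkout", status: "ERROR" }] },
];

// Keep 10% of successful checkouts and 100% of failed ones.
const kept = traces.filter((trace) => shouldKeepTrace(trace, 0.1));
console.log(kept.map((trace) => trace.traceId));
```

Deriving the decision from the trace ID, rather than from Math.random(), means every component that evaluates the same trace reaches the same verdict. A real tail sampler must also wait until all spans of a trace have arrived before deciding, which this sketch ignores.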

With OpenTelemetry, teams can retain critical debugging data while reducing unnecessary storage costs—ensuring cost-effective observability.

Another major challenge in observability is the lack of consistency in how telemetry data is collected and formatted across teams and services. Without standardization, different services may use inconsistent logging formats, metric names, and tracing conventions, making system-wide analysis complex and inefficient.

OpenTelemetry provides a standardized instrumentation framework that keeps traces, metrics, and logs in a consistent format across an organization. Its semantic conventions define standard attribute names (for example, service.name or http.request.method), so telemetry from different teams and services follows industry best practices and is easier to correlate and analyze later.
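As an illustration of what shared conventions buy you, the sketch below maps ad hoc field names that different teams might use onto OpenTelemetry HTTP semantic-convention keys. The mapping table and toSemconvAttributes are hypothetical; the target keys (http.request.method, http.response.status_code, http.route, url.path) come from the stable HTTP semantic conventions, but check which convention version your instrumentation emits.

```javascript
// Hypothetical mapping from team-specific field names to semantic-convention keys.
const FIELD_TO_SEMCONV = {
  method: "http.request.method",
  httpMethod: "http.request.method",
  status: "http.response.status_code",
  statusCode: "http.response.status_code",
  http_status: "http.response.status_code",
  route: "http.route",
  path: "url.path",
};

function toSemconvAttributes(record) {
  const attributes = {};
  for (const [field, value] of Object.entries(record)) {
    // Unknown fields keep their original name instead of being dropped.
    attributes[FIELD_TO_SEMCONV[field] ?? field] = value;
  }
  return attributes;
}

// Two services describe the same failed request differently...
const fromServiceA = toSemconvAttributes({ method: "POST", status: 500, route: "/checkout" });
const fromServiceB = toSemconvAttributes({ httpMethod: "POST", http_status: 500, route: "/checkout" });
// ...but end up with identical, queryable attribute keys.
console.log(fromServiceA);
console.log(fromServiceB);
```

With OpenTelemetry instrumentation the keys are emitted in this form from the start, so no translation layer is needed; the sketch only shows why a single vocabulary makes cross-service queries possible.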

Additionally, OpenTelemetry’s extensible architecture allows teams to customize their instrumentation while maintaining a unified data model. Whether automatically instrumenting common web frameworks or defining custom metrics for business-specific use cases, teams can adapt OpenTelemetry to their needs without sacrificing standardization.

This structured approach reduces implementation complexity, enhances collaboration between teams, and ensures that telemetry data remains meaningful and actionable throughout the observability pipeline.

The OTel framework comprises several components, each playing a crucial role in the collection, processing, and exporting of telemetry data.

At the core of OpenTelemetry is the OpenTelemetry Specification, which defines the standards for collecting, processing, and exporting telemetry data across different programming languages and observability tools.

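This ensures that instrumentation remains consistent, portable, and vendor-neutral. The specification is divided into three main components:

- API: defines the data types and operations for generating and correlating traces, metrics, and logs.
- SDK: defines the requirements for language-specific implementations of the API, including configuration, processing, and exporting.
- Data: defines the OpenTelemetry Protocol (OTLP) and the vendor-neutral semantic conventions.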

By following this specification, OpenTelemetry keeps telemetry data portable and consistent across languages and tools, making it easier for engineers to integrate observability into their workflows.

Before you can analyze telemetry data, you first need to instrument your application—this means adding the right hooks to capture traces, metrics, and logs as requests flow through your system. This is where OpenTelemetry’s APIs and SDKs come in.

Engineers can use these to build a reliable telemetry pipeline without having to reinvent the wheel for each service.

Note:

OpenTelemetry supports auto-instrumentation, which enables teams to collect telemetry data with minimal or no changes to application source code. This is particularly useful for legacy systems or third-party dependencies where modifying the codebase is not feasible.

Once telemetry data is collected, it needs to be processed and sent to an observability backend. But instead of letting every service communicate directly with multiple tools, OpenTelemetry introduces the Collector—a central hub that receives, processes, and exports telemetry data efficiently.

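The Collector operates in three key stages:

- Receivers ingest telemetry, for example OTLP data sent by instrumented services.
- Processors transform the data, for example by batching, filtering, or sampling it.
- Exporters send the processed data to one or more observability backends.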

By decoupling instrumentation from observability tools, the Collector provides greater flexibility and scalability, ensuring teams can switch monitoring solutions without reconfiguring every service.

Example: If your company wants to migrate from Prometheus to Randoli Observability, OpenTelemetry makes it possible without touching application code—simply reconfigure the Collector to send data to the new backend.
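The decoupling can be pictured with a toy JavaScript model: receivers feed a pipeline, processors transform each batch, and exporters deliver it. This is only an illustration (the real Collector is written in Go and configured in YAML), and makePipeline, fakeExporter, and dropHealthChecks are hypothetical names. The point is that migrating backends changes the pipeline definition, not the data the application sends.

```javascript
// Build a pipeline from processors and exporters (hypothetical, illustration only).
function makePipeline({ processors, exporters }) {
  return {
    // A receiver would call consume() after decoding incoming telemetry.
    consume(batch) {
      const processed = processors.reduce((items, processor) => processor(items), batch);
      for (const exporter of exporters) exporter.export(processed);
      return processed;
    },
  };
}

// Processor: drop health-check spans before they reach (and cost money in) a backend.
const dropHealthChecks = (spans) => spans.filter((span) => span.name !== "GET /healthz");

// Stand-in for a backend exporter that just records what it was sent.
function fakeExporter(name) {
  const received = [];
  return { name, received, export: (spans) => received.push(...spans) };
}

// The application always sends the same batch to the same receiver.
const batch = [
  { name: "POST /checkout", durationMs: 120 },
  { name: "GET /healthz", durationMs: 1 },
];

// Before the migration, the pipeline exports to the old backend...
const oldBackend = fakeExporter("old-backend");
let pipeline = makePipeline({ processors: [dropHealthChecks], exporters: [oldBackend] });
pipeline.consume(batch);

// ...afterwards only the pipeline definition changes.
const newBackend = fakeExporter("new-backend");
pipeline = makePipeline({ processors: [dropHealthChecks], exporters: [newBackend] });
pipeline.consume(batch);

console.log(oldBackend.received.length, newBackend.received.length); // prints: 1 1
```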

Note: Why is this important?

The Collector is particularly useful in large-scale Kubernetes environments, where thousands of services generate telemetry data. Instead of each service managing its own exporters, the Collector centralizes processing and optimization, making observability more cost-efficient and manageable.

The OpenTelemetry Collector is a single binary that can be deployed in different ways for different use cases, for example as an agent running next to each application or as a gateway that centralizes processing. See the Collector documentation for the full set of deployment patterns.

One of the biggest challenges in observability is the inconsistency in how different tools represent telemetry data.

For example, metric types such as Summary, Histogram, and Counter may be defined differently across various monitoring systems, making it difficult to correlate data across platforms. Similarly, traces and logs often follow proprietary formats, leading to vendor lock-in and operational overhead when integrating multiple observability backends.

OpenTelemetry solves this with OTLP (OpenTelemetry Protocol)—a vendor-neutral standard for transmitting telemetry data. It provides a unified way to export logs, traces, and metrics, ensuring that observability pipelines remain portable and interoperable across tools.
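To show what a vendor-neutral format looks like in practice, the sketch below builds an OTLP/JSON trace export request by hand. It assumes the OTLP/JSON encoding rules as we understand them (trace and span IDs as hex strings, enum values as integers, nanosecond timestamps as decimal strings); the helper names and the scope name manual-example are hypothetical. The SDK exporters normally build this payload for you, often as protobuf rather than JSON.

```javascript
// OTLP enum values are encoded as integers in OTLP/JSON.
const SPAN_KIND_SERVER = 2;
const STATUS_CODE_UNSET = 0;
const STATUS_CODE_ERROR = 2;

// OTLP timestamps are nanoseconds since the Unix epoch; 64-bit values are
// written as decimal strings to avoid precision loss in JSON.
function msToUnixNanoString(ms) {
  return (BigInt(ms) * 1000000n).toString();
}

// Hypothetical helper: wrap one server span in an OTLP/JSON export request
// (resource -> instrumentation scope -> spans).
function toOtlpTraceRequest(serviceName, span) {
  return {
    resourceSpans: [
      {
        resource: {
          attributes: [{ key: "service.name", value: { stringValue: serviceName } }],
        },
        scopeSpans: [
          {
            scope: { name: "manual-example" },
            spans: [
              {
                traceId: span.traceId, // 32 hex characters (hex, not base64, in OTLP/JSON)
                spanId: span.spanId, // 16 hex characters
                name: span.name,
                kind: SPAN_KIND_SERVER,
                startTimeUnixNano: msToUnixNanoString(span.startMs),
                endTimeUnixNano: msToUnixNanoString(span.endMs),
                attributes: [
                  { key: "http.response.status_code", value: { intValue: span.statusCode } },
                ],
                // Server spans mark 5xx responses as errors and otherwise leave status unset.
                status: { code: span.statusCode >= 500 ? STATUS_CODE_ERROR : STATUS_CODE_UNSET },
              },
            ],
          },
        ],
      },
    ],
  };
}

// Send the payload to an OTLP/HTTP receiver (assumes Node.js 18+ for global fetch).
async function sendToCollector(payload, url = "http://localhost:4318/v1/traces") {
  const response = await fetch(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  });
  if (!response.ok) throw new Error(`Collector responded with HTTP ${response.status}`);
}

const request = toOtlpTraceRequest("checkout-api", {
  traceId: "4bf92f3577b34da6a3ce929d0e0e4736",
  spanId: "00f067aa0ba902b7",
  name: "POST /checkout",
  startMs: 1700000000000,
  endMs: 1700000000120,
  statusCode: 500,
});
console.log(JSON.stringify(request, null, 2));
```

Any OTLP/HTTP receiver, such as the Collector's otlp receiver on port 4318, accepts this same structure, which is why the backend behind the Collector can change without the application noticing.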

By adopting OTLP in production, teams can avoid most custom adapters when integrating different observability tools, because any OTLP-compatible backend can ingest the data directly. This simplifies telemetry pipelines, improves data consistency, and reduces long-term dependence on any single vendor.

When managing dozens or even hundreds of microservices in Kubernetes, manually configuring OpenTelemetry Collectors, SDKs, and exporters can become a huge operational burden. The OpenTelemetry Kubernetes Operator simplifies this by automating the management of Collectors and the auto-instrumentation of workloads using OpenTelemetry instrumentation libraries.

Some of the benefits of using the Kubernetes operator are as follows:

For instance, if a DevOps team needs tracing data across multiple Kubernetes namespaces, the OTel Kubernetes Operator ensures that every namespace automatically gets the correct OpenTelemetry configuration, removing manual intervention and improving efficiency.

Note: The OpenTelemetry Demo

Bringing all these components together, the OpenTelemetry Demo simulates a real-world distributed system, showcasing how applications can instrument traces, metrics, and logs, route them through the OpenTelemetry Collector, and export them to various supported observability backends such as Jaeger, Prometheus or vendor-specific backends.

Whether you're new to OpenTelemetry or looking to refine your implementation, exploring this demo provides valuable insights into setting up a robust observability pipeline.

Check out the demo to learn more!

In this section, we'll walk through instrumenting a small Node.js microservice to collect traces and metrics, export them to an OpenTelemetry Collector, and visualize them using Jaeger and Prometheus.

We’ll build a simple checkout API that processes payments. Some requests will randomly fail to simulate real-world transaction errors.

First, let us initialize the project and install the required dependencies:

```shell
mkdir otel-demo && cd otel-demo
npm init -y
npm install express
```

Then, create an app.js file with the following code:

```javascript
const express = require("express");

const app = express();
const port = 3000;

app.use(express.json());

app.post("/checkout", (req, res) => {
  // Simulate payment processing
  const paymentSuccess = Math.random() > 0.2; // 80% success rate

  if (paymentSuccess) {
    res.status(200).send("Payment processed!");
  } else {
    res.status(500).send("Payment failed!");
  }
});

app.listen(port, () => {
  console.log(`Checkout API running on http://localhost:${port}`);
});
```

At this point, the API works, but there’s no visibility into request execution. We don’t know how long transactions take or where failures originate.

To enable OpenTelemetry, we need to initialize tracing and metrics collection. Let us first install the necessary OpenTelemetry dependencies:

```shell
npm install @opentelemetry/sdk-node \
  @opentelemetry/auto-instrumentations-node \
  @opentelemetry/exporter-trace-otlp-proto \
  @opentelemetry/exporter-metrics-otlp-proto \
  @opentelemetry/sdk-metrics
```

Save the following instrumentation code as tracing.js. It initializes OpenTelemetry and configures it to send telemetry data to the OpenTelemetry Collector.

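```javascript
const { NodeSDK } = require("@opentelemetry/sdk-node");
const { getNodeAutoInstrumentations } = require("@opentelemetry/auto-instrumentations-node");
const { OTLPTraceExporter } = require("@opentelemetry/exporter-trace-otlp-proto");
const { OTLPMetricExporter } = require("@opentelemetry/exporter-metrics-otlp-proto");
const { PeriodicExportingMetricReader } = require("@opentelemetry/sdk-metrics");

// No URLs are hard-coded: the exporters read the standard OTEL_EXPORTER_OTLP_*
// environment variables and fall back to http://localhost:4318, so the same file
// works both locally and inside Docker Compose.
const sdk = new NodeSDK({
  traceExporter: new OTLPTraceExporter(),
  metricReader: new PeriodicExportingMetricReader({
    exporter: new OTLPMetricExporter(),
    exportIntervalMillis: 10000, // export every 10 s instead of the 60 s default
  }),
  // Automatically instruments supported libraries such as http and express.
  instrumentations: [getNodeAutoInstrumentations()],
});

sdk.start();
console.log("✅ OpenTelemetry SDK started successfully"); // optional statement

// Flush buffered telemetry before the process exits.
process.on("SIGTERM", () => {
  sdk
    .shutdown()
    .catch((err) => console.error("Error shutting down OpenTelemetry SDK", err))
    .finally(() => process.exit(0));
});
```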

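In this setup, we're:

- exporting traces over OTLP/HTTP (protobuf encoding) to the Collector's /v1/traces endpoint on port 4318;
- using a PeriodicExportingMetricReader to push metrics at a fixed interval to the Collector's /v1/metrics endpoint;
- enabling auto-instrumentation through getNodeAutoInstrumentations(), which instruments supported libraries such as http and Express without changes to app.js.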

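To run the instrumented API, preload tracing.js with Node's -r flag so the SDK starts before Express is loaded (auto-instrumentation has to patch modules before they are required):

```shell
OTEL_SERVICE_NAME=checkout-api node -r ./tracing.js app.js
```

Every request now generates traces and metrics destined for the OpenTelemetry Collector. Until a Collector is running, the exporters cannot deliver them and may log export errors; we set the Collector up next.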

The OpenTelemetry Collector will act as an intermediary between our application and observability backends (in this case, Jaeger and Prometheus).

Note: OTel Collector Deployment Patterns

OpenTelemetry supports multiple Collector deployment patterns, which define how the OTel Collector is integrated into an infrastructure to optimize scalability, latency, and processing efficiency.

In this example, the OTel configuration follows the agent deployment pattern where the Collector service runs alongside the application and collects telemetry data before forwarding it to the observability backends (in this case, Jaeger and Prometheus).

In production, this would typically involve deploying the Collector as a sidecar within each service pod (for Kubernetes) or as a lightweight daemon on individual hosts. This reduces network latency and processes telemetry locally before sending it to a centralized observability system.

Save the following Collector configuration as otel-collector-config.yaml, the file that the Docker Compose setup mounts into the Collector container:

```yaml
receivers:
  otlp:
    protocols:
      http:
        endpoint: "0.0.0.0:4318"

exporters:
  otlp:
    endpoint: "jaeger:4317" # Send traces to Jaeger using OTLP gRPC
    tls:
      insecure: true
  prometheus:
    endpoint: "0.0.0.0:9090"

service:
  pipelines:
    traces:
      receivers: [otlp]
      exporters: [otlp] # Sending traces via OTLP to Jaeger
    metrics:
      receivers: [otlp]
      exporters: [prometheus]
```

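This configuration:

- receives OTLP data over HTTP on port 4318, where the app sends its telemetry;
- forwards traces to Jaeger over OTLP/gRPC at jaeger:4317, with TLS disabled for this local setup;
- exposes the received metrics on port 9090 in Prometheus format so Prometheus can scrape them;
- wires these together in two pipelines, one for traces and one for metrics.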

Note:

The OpenTelemetry Collector no longer ships a dedicated Jaeger exporter; it was removed in favor of OTLP, which Jaeger accepts natively. To send traces to Jaeger, we use the built-in OTLP exporter and point it at Jaeger's OTLP gRPC endpoint, jaeger:4317.

Prometheus needs to scrape metrics from the OpenTelemetry Collector periodically. To do this, we define a Prometheus configuration file (prometheus.yaml):

```yaml
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: "otel-metrics"
    static_configs:
      - targets: ["otel-collector:9090"] # Scrape the Collector's metrics
```

Note: Prometheus Integration Considerations

In this implementation, Prometheus scrapes metrics from a single OTel Collector instance using a pull-based (scrape) approach.

While this works well in simpler deployments, in setups with multiple collectors, a push-based approach may be required where the OTel collector pushes the relevant metrics to Prometheus or any other supported backends.

Recent Prometheus versions also include a native OTLP receiver (it must be explicitly enabled), so OTel Collectors can push metrics directly to Prometheus instead of relying solely on scraping.

If scaling beyond a single collector, this is something to consider for better efficiency.

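Instead of starting each service manually, we use Docker Compose to run our API, the OpenTelemetry Collector, Jaeger, and Prometheus together. The app service uses build: ., so it assumes a Dockerfile in the project directory that installs the dependencies and starts the API with node -r ./tracing.js app.js (the Dockerfile itself is not shown here).

Save the following as docker-compose.yaml: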

```yaml
services:
  app:
    build: . # assumes a Dockerfile that runs: node -r ./tracing.js app.js
    environment:
      OTEL_EXPORTER_OTLP_ENDPOINT: "http://otel-collector:4318"
      OTEL_EXPORTER_OTLP_TRACES_ENDPOINT: "http://otel-collector:4318/v1/traces"
      OTEL_EXPORTER_OTLP_METRICS_ENDPOINT: "http://otel-collector:4318/v1/metrics"
      OTEL_RESOURCE_ATTRIBUTES: "service.name=checkout-api"
    ports:
      - 3000:3000
    depends_on:
      - otel-collector

  otel-collector:
    image: otel/opentelemetry-collector
    command: --config=/etc/otel-config.yaml
    volumes:
      - ./otel-collector-config.yaml:/etc/otel-config.yaml
    ports:
      - 4318:4318 # OTLP over HTTP
    depends_on:
      - jaeger

  jaeger:
    image: jaegertracing/all-in-one:latest
    ports:
      - 16686:16686 # Jaeger UI
      - 4317:4317 # OTLP gRPC receiver
      - 14250:14250 # legacy Jaeger gRPC (model.proto) port, not used in this setup

  prometheus:
    image: prom/prometheus
    volumes:
      - ./prometheus.yaml:/etc/prometheus/prometheus.yml
    ports:
      - 9090:9090
```
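The app service sets both the base endpoint and the signal-specific endpoints. Under the OTLP exporter specification, a signal-specific variable such as OTEL_EXPORTER_OTLP_TRACES_ENDPOINT is used as-is, OTEL_EXPORTER_OTLP_ENDPOINT gets v1/traces or v1/metrics appended, and http://localhost:4318 is the default when neither is set. The JavaScript sketch below restates those rules; resolveOtlpHttpEndpoint is a hypothetical helper, not an SDK function.

```javascript
const DEFAULT_OTLP_HTTP_ENDPOINT = "http://localhost:4318";

// Hypothetical helper restating the OTLP/HTTP endpoint rules.
// signal is "traces", "metrics", or "logs".
function resolveOtlpHttpEndpoint(signal, env = process.env) {
  // 1. A signal-specific URL is used exactly as given.
  const specific = env[`OTEL_EXPORTER_OTLP_${signal.toUpperCase()}_ENDPOINT`];
  if (specific) return specific;
  // 2. Otherwise "v1/<signal>" is appended to the base URL (or to the default).
  const base = env.OTEL_EXPORTER_OTLP_ENDPOINT || DEFAULT_OTLP_HTTP_ENDPOINT;
  return `${base.replace(/\/+$/, "")}/v1/${signal}`;
}

// Part of the app service's environment from docker-compose.yaml.
const appEnv = {
  OTEL_EXPORTER_OTLP_ENDPOINT: "http://otel-collector:4318",
  OTEL_EXPORTER_OTLP_TRACES_ENDPOINT: "http://otel-collector:4318/v1/traces",
};
console.log(resolveOtlpHttpEndpoint("traces", appEnv)); // http://otel-collector:4318/v1/traces
console.log(resolveOtlpHttpEndpoint("metrics", { OTEL_EXPORTER_OTLP_ENDPOINT: "http://otel-collector:4318" })); // http://otel-collector:4318/v1/metrics
console.log(resolveOtlpHttpEndpoint("traces", {})); // http://localhost:4318/v1/traces
```

With the values in the Compose file, both routes resolve to the same Collector URLs, so the signal-specific variables are redundant but harmless. Keep in mind that a url passed directly to an exporter's constructor in code takes precedence over these variables in the JavaScript SDK.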

Start all the services using the following:

```shell
docker compose up --build
```

Let us generate some traffic to our API. The following loop sends 20 POST requests to the checkout API:

```shell
for i in $(seq 1 20); do curl -s -X POST http://localhost:3000/checkout; echo; done
```

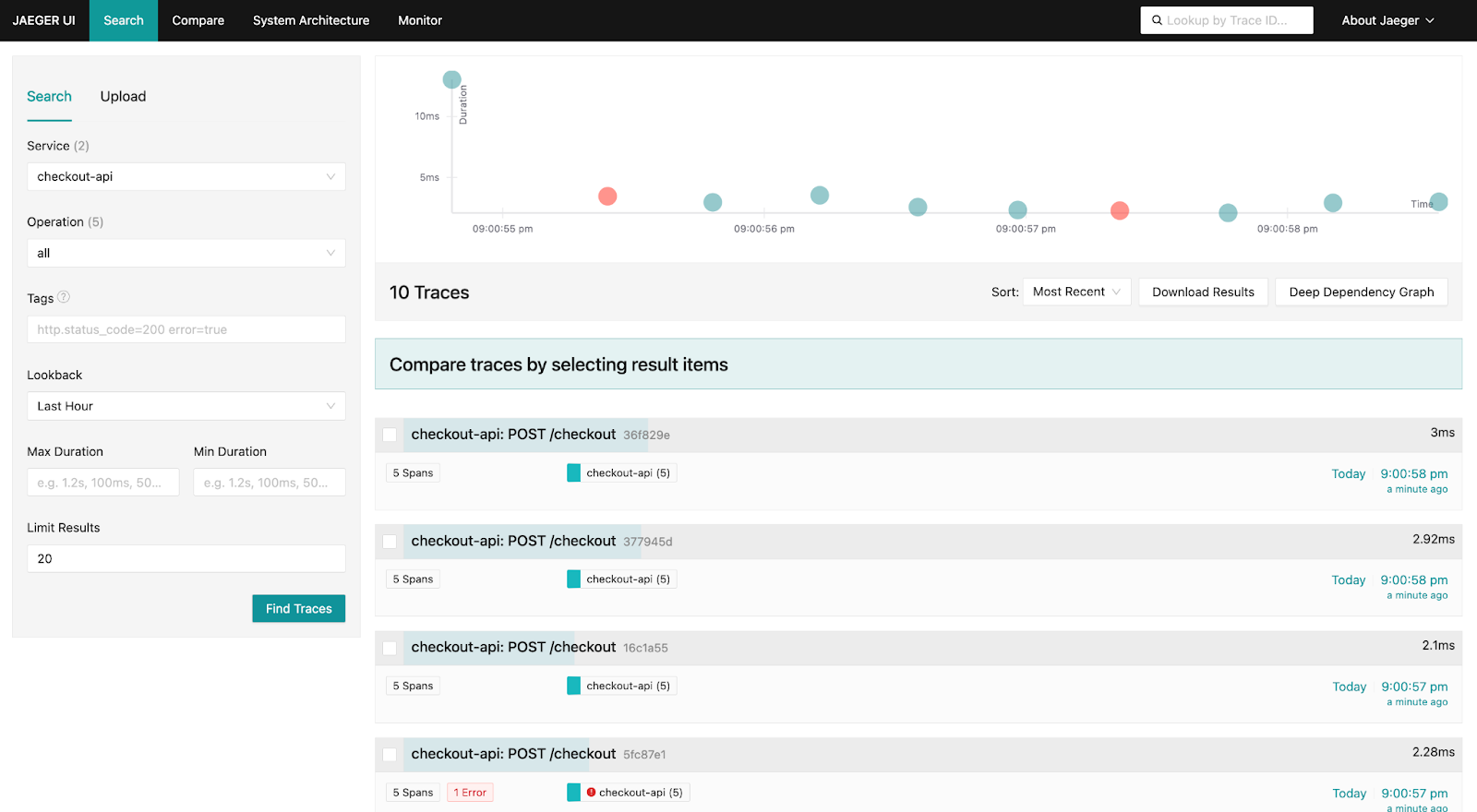

Visit the Jaeger dashboard at http://localhost:16686 to view all the traces related to the checkout-api service.

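Visit the Prometheus UI at http://localhost:9090 and query the metrics exported by the Collector, for example the HTTP server request-duration histogram produced by the auto-instrumentation (its exact name depends on the instrumentation version), to analyze latency and request rates.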

At Randoli, we’re empowering engineers to make sense of their telemetry data. By combining OpenTelemetry with eBPF, we give teams deep visibility into their Kubernetes environments—without the complexity of vendor lock-in.

By unifying traces, metrics, and logs with system-level insights, OpenTelemetry and eBPF help you turn raw telemetry into meaningful, actionable intelligence.

Instrument your clusters effortlessly with OpenTelemetry’s vendor-neutral SDKs, then layer eBPF-driven network and kernel-level monitoring to catch bottlenecks, reduce overhead, and troubleshoot issues at lightning speed.

Learn more about how we’re making Kubernetes observability simpler, faster, and more powerful with OpenTelemetry and eBPF.

OpenTelemetry has become the industry standard for collecting, processing, and exporting telemetry data, enabling teams to gain deep observability without vendor lock-in. By providing a unified framework for traces, metrics, and logs, it simplifies instrumentation and empowers engineers to build resilient, scalable, and cost-efficient monitoring pipelines.

With a well-structured approach, organizations can standardize telemetry across their stack, reduce operational complexity, and make data-driven decisions that improve performance and reliability.

To dive deeper into OpenTelemetry’s capabilities or contribute to the project, check out the official documentation and GitHub repository.
